Mortgage & Lending Ecosystems.

At Temenos, one of our key challenges was integrating explainable AI into our banking platforms. Clients wanted AI-driven insights, but also needed transparency to build trust and meet compliance requirements.

Role: UX Designer, Innovation Hub — XAI Product Translation & Client Enablement.

I acted as the first point of contact between Temenos’ Explainable AI (XAI) team and the sales organisation—translating complex model behaviour into client-ready narratives, demos, and decision workflows.

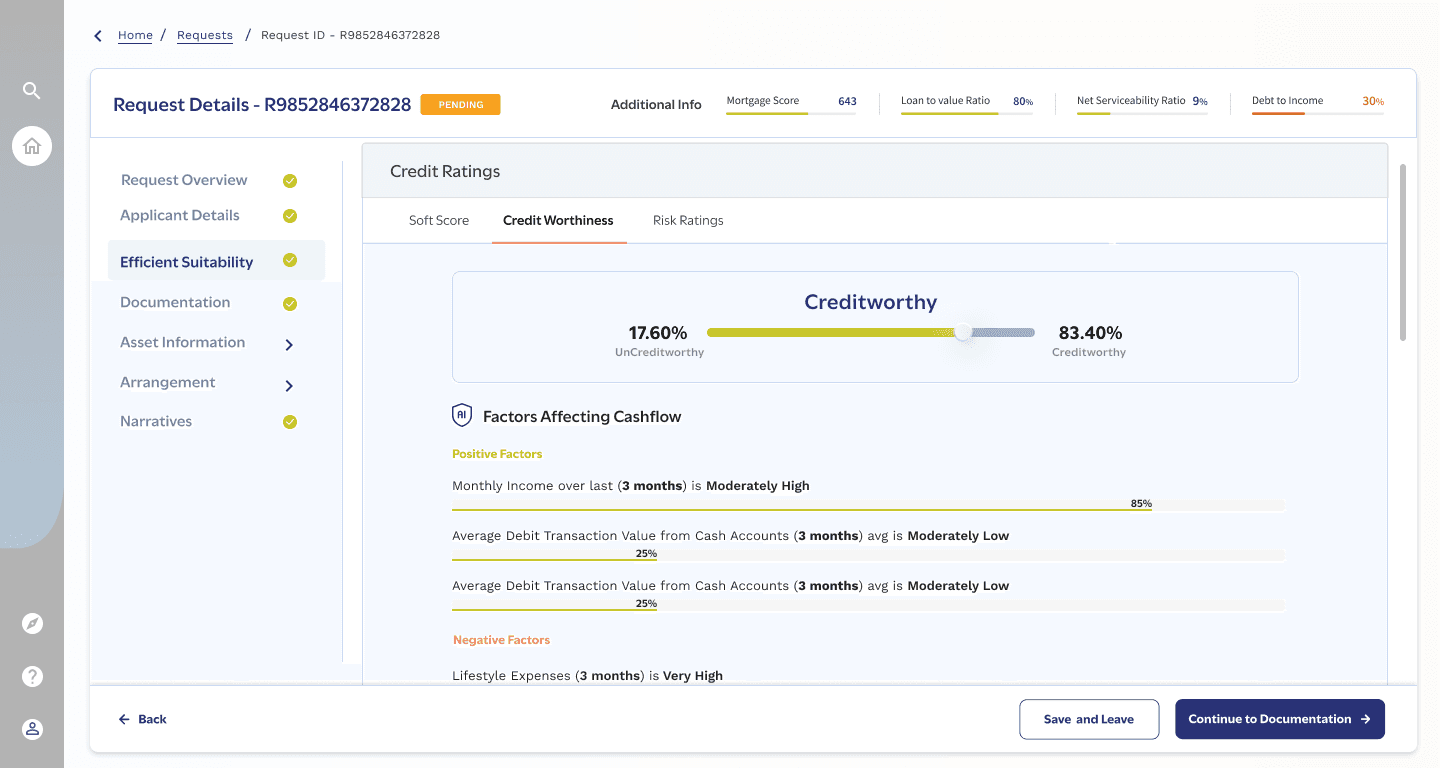

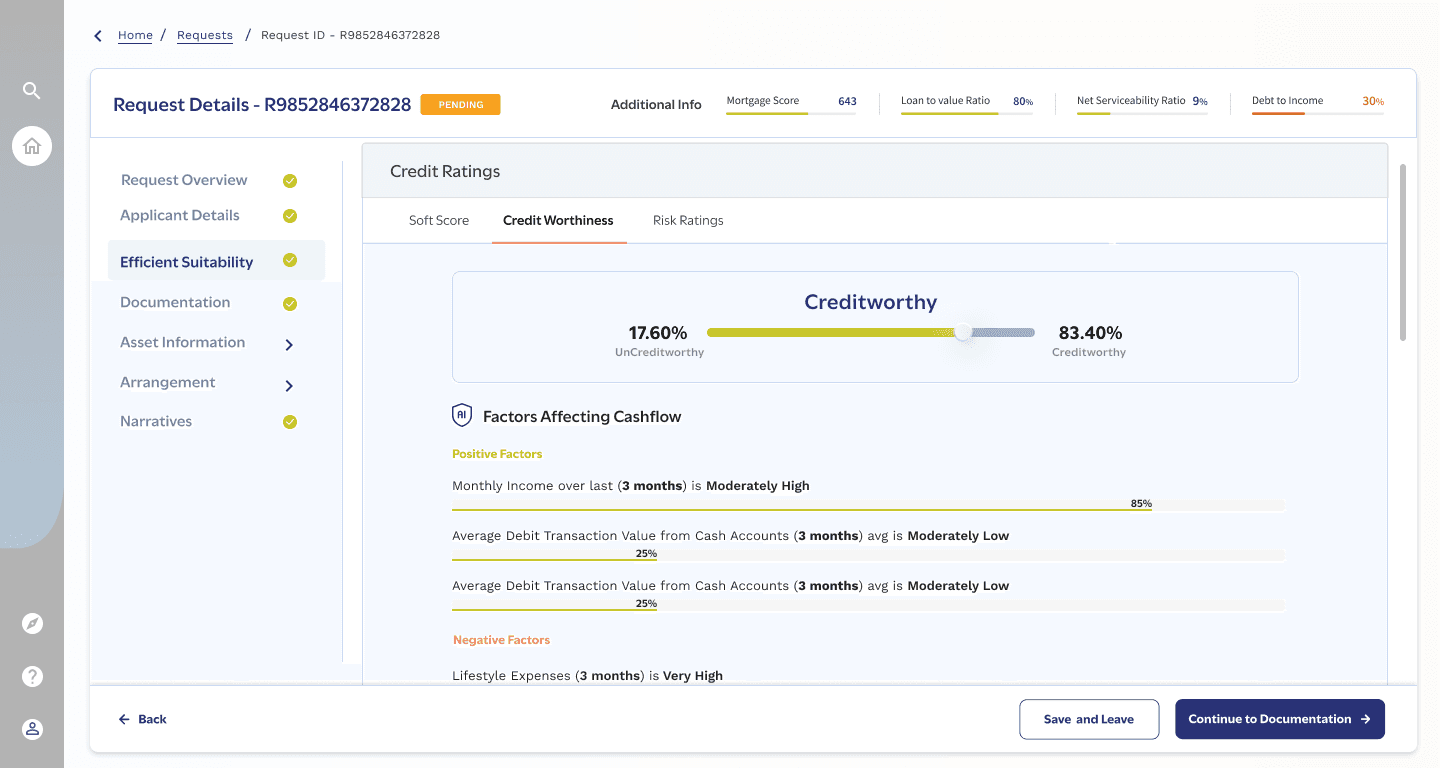

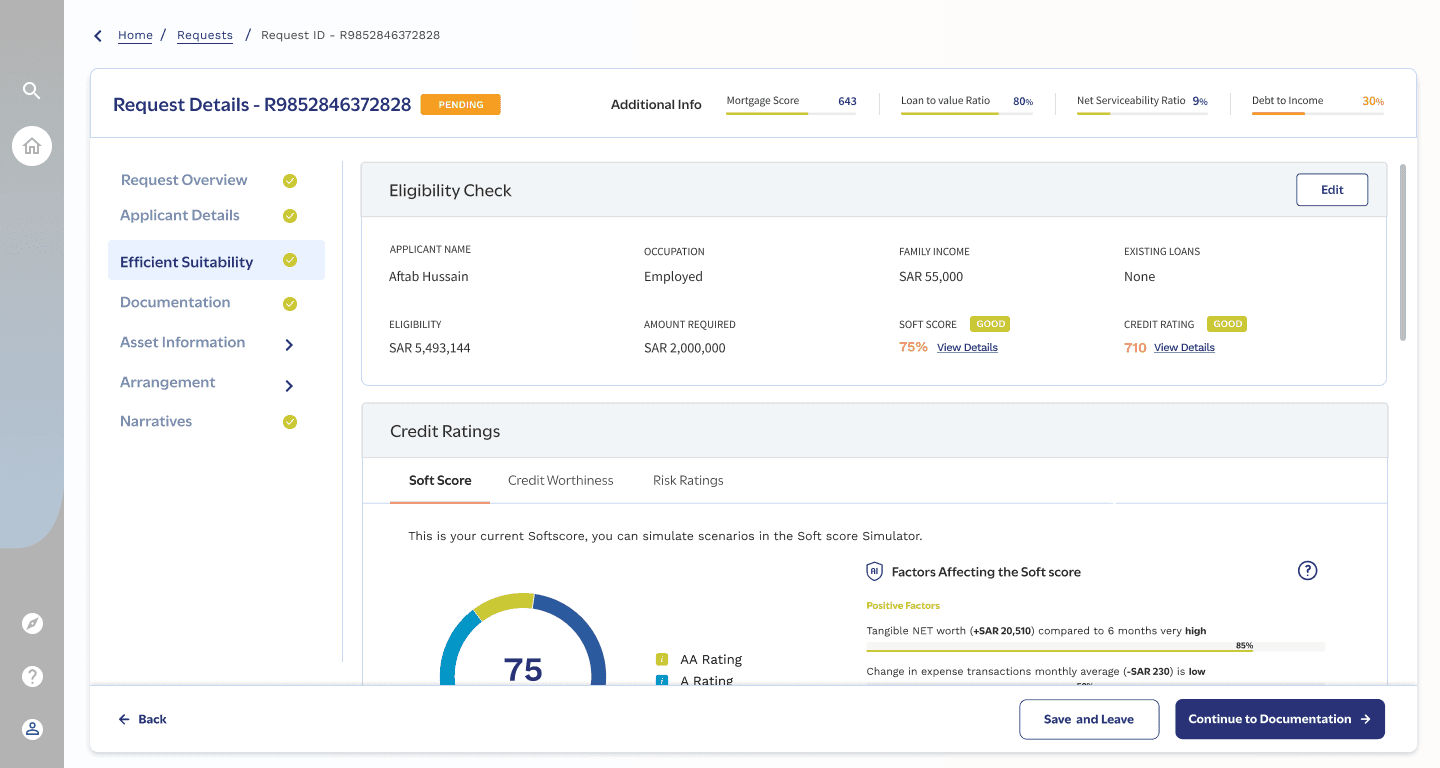

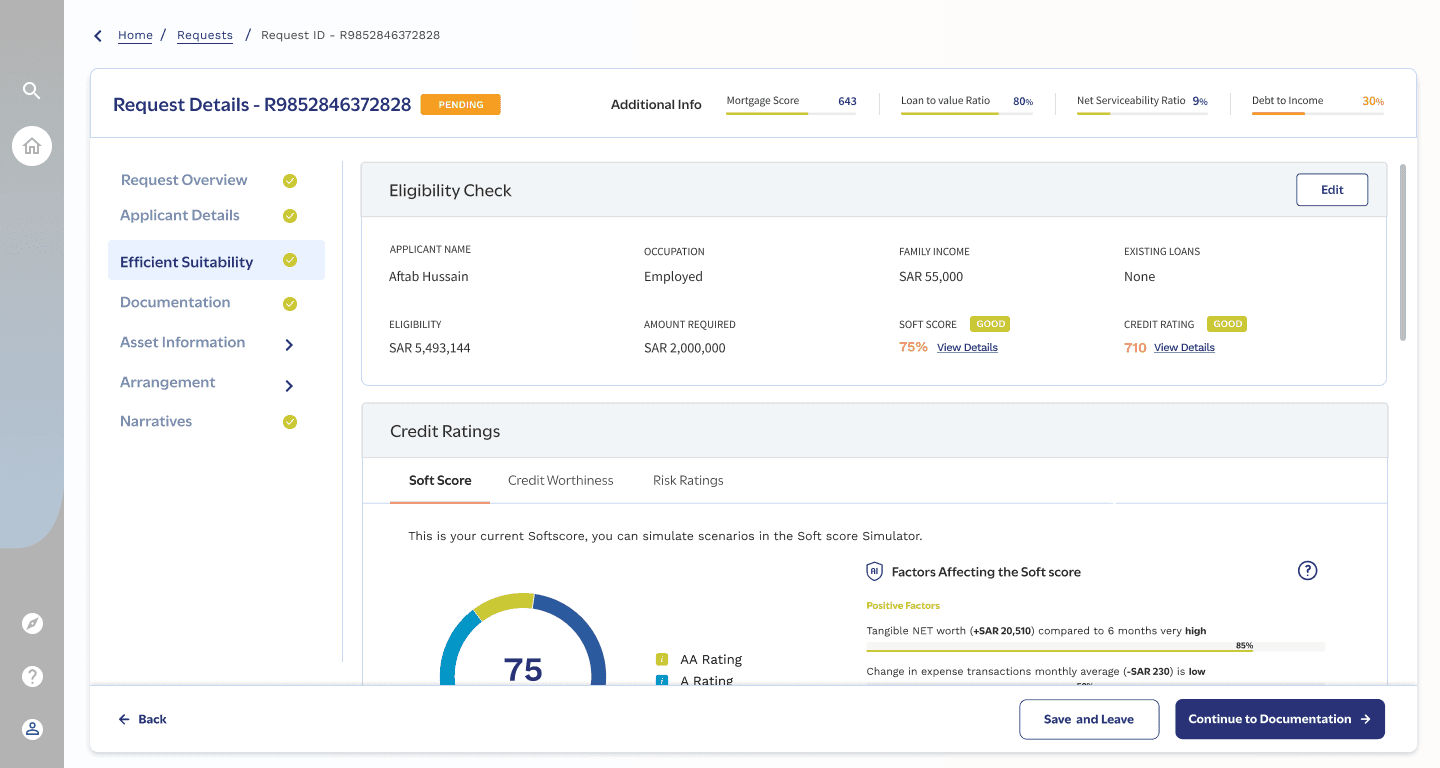

Below is an image of what the interface looked like before XAI was introduced.

Employer: Temenos

Role: UX Designer

Year: 2023

Problem

For banks evaluating Temenos’ AI-driven mortgage decisioning, the primary concern was whether AI decisions could be trusted, explained, and defended in real regulatory conditions.

From a UX perspective, the interface exposed information but failed to frame decisions—forcing users to manually interpret raw outputs instead of guiding them toward defensible, policy-aligned reasoning.

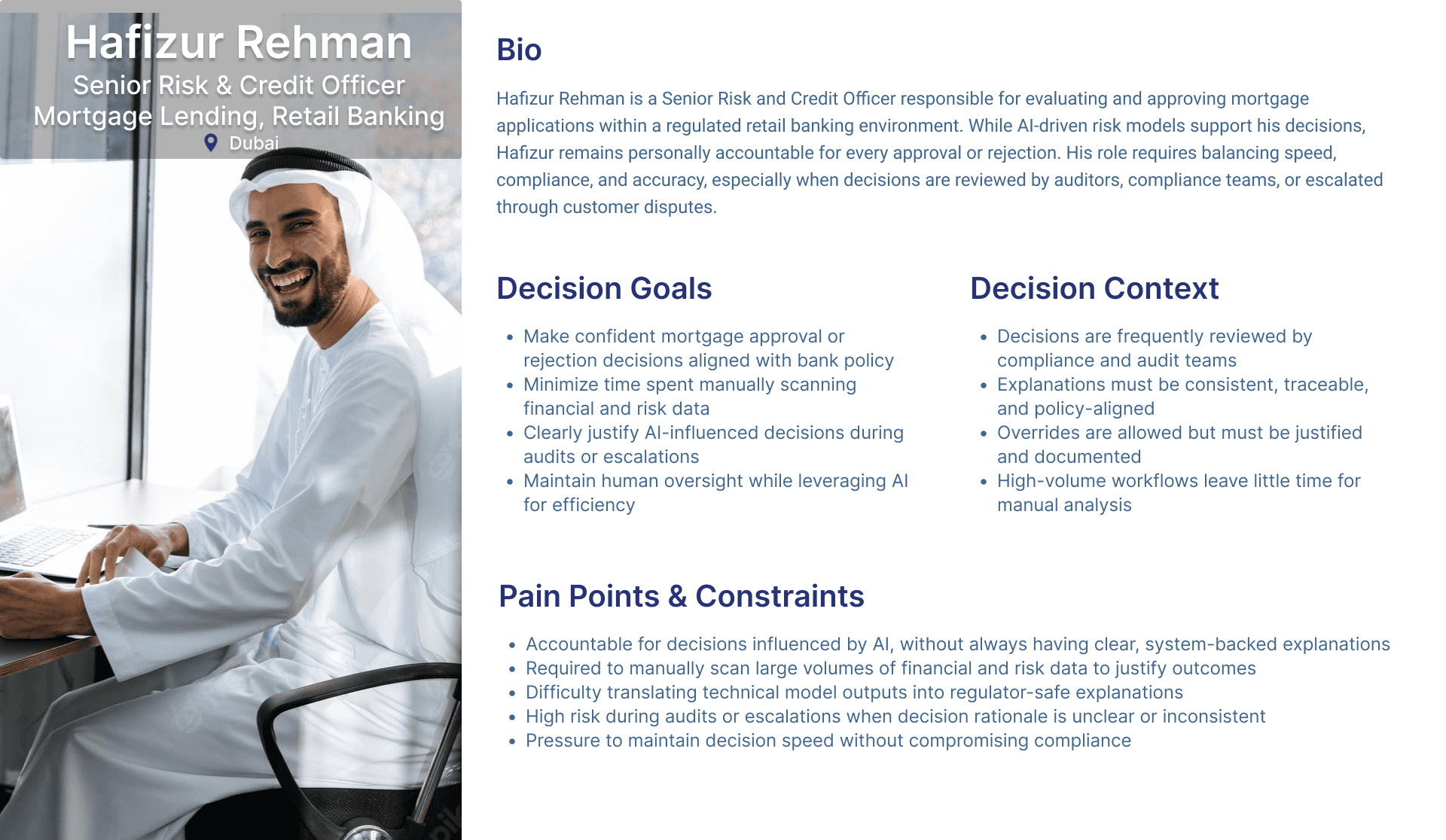

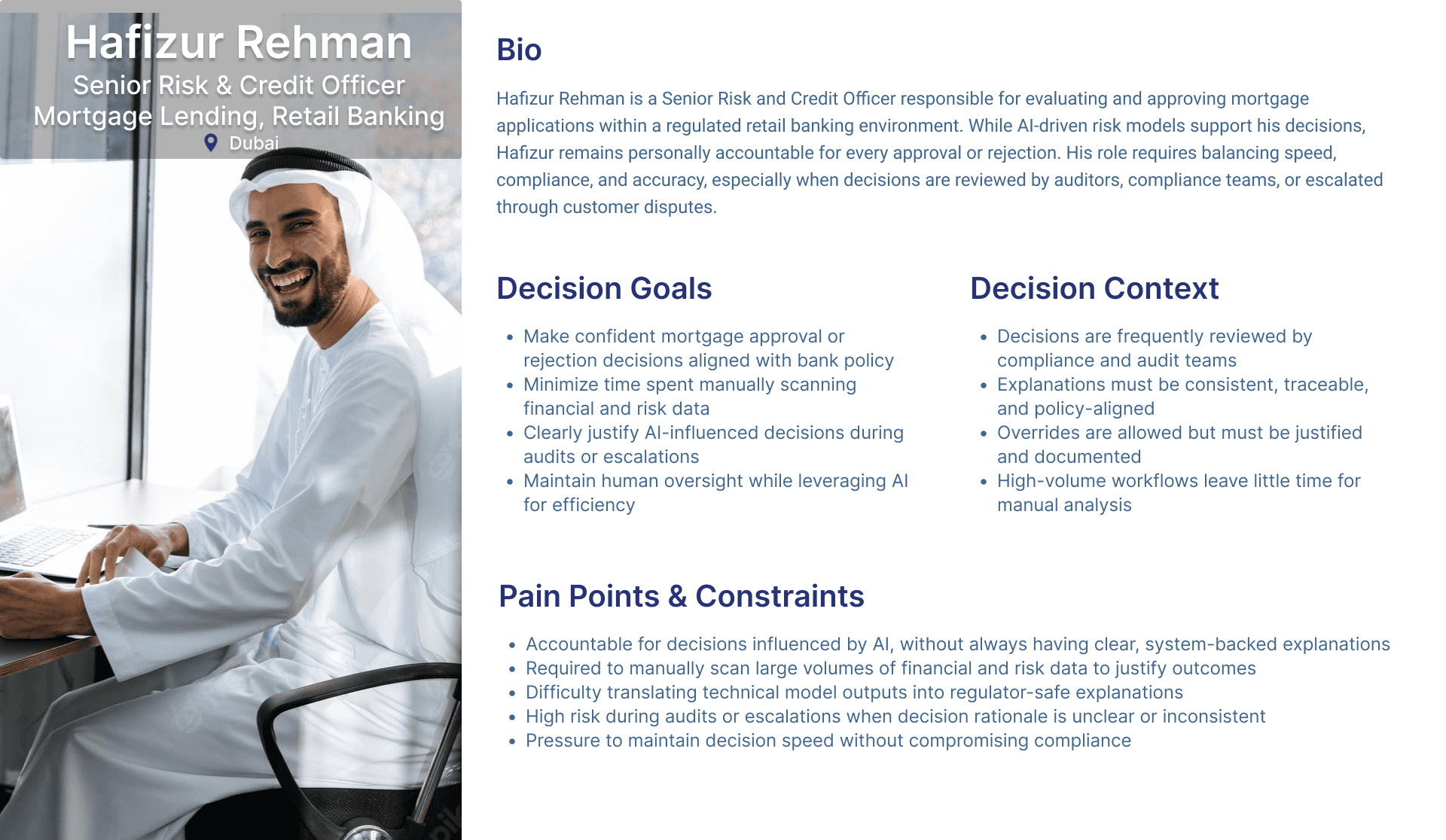

Primary User

Risk and Credit Officers at retail banks responsible for approving or rejecting mortgage applications in high-volume lending environments.

They are accountable not just for speed, but for:

Justifying AI-influenced decisions to auditors and regulators

Explaining outcomes during customer escalations

Ensuring every decision aligns with internal credit policy

They operate under time pressure, but cannot rely on the AI blindly—human oversight is mandatory.

Design Approach

The interface was designed around progressive disclosure to balance two competing requirements: leveraging AI for speed and consistency, while preserving meaningful human intervention where accountability demanded it.

Rather than exposing all model outputs upfront, the design sequenced information to guide users through how and why a decision was made.

At the point of decision, users were presented with:

A soft score summarizing overall risk

XAI-driven explanations highlighting the primary factors influencing the outcome

This enabled risk and credit officers to quickly understand the AI’s reasoning and decide whether to accept the automated recommendation or intervene, without being forced into manual analysis by default.

Additional financial data, model inputs, and supporting evidence were progressively revealed only when required, allowing users to:

Validate or challenge the AI decision

Satisfy audit and compliance requirements

Apply human judgment without undermining automation benefits

By structuring the experience this way, the system avoided false automation and unnecessary manual override—supporting automation where confidence was high, and human oversight where risk justified it.
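The layering described above can be pictured as two views over the same decision record. This is only a minimal sketch under stated assumptions, not the Temenos implementation: the `Decision` and `Factor` types, the 0-100 score scale, the factor weights, and the sample values are all hypothetical and exist purely for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Factor:
    label: str     # human-readable factor name (hypothetical)
    weight: float  # signed contribution to the outcome (hypothetical scale)


@dataclass
class Decision:
    soft_score: int      # overall risk summary, assumed to be 0-100
    recommendation: str  # e.g. "approve" or "refer"
    factors: list[Factor]
    evidence: dict[str, str] = field(default_factory=dict)  # detailed inputs


def summary_view(decision: Decision, top_n: int = 3) -> dict:
    """First layer: score, recommendation, and the strongest factors only."""
    ranked = sorted(decision.factors, key=lambda f: abs(f.weight), reverse=True)
    return {
        "soft_score": decision.soft_score,
        "recommendation": decision.recommendation,
        "top_factors": [f.label for f in ranked[:top_n]],
    }


def detail_view(decision: Decision) -> dict:
    """Second layer: all factors plus supporting evidence, shown on demand."""
    full = summary_view(decision, top_n=len(decision.factors))
    return {**full, "evidence": decision.evidence}


# Illustrative values only; none of these come from a real application.
example = Decision(
    soft_score=72,
    recommendation="refer",
    factors=[
        Factor("Debt-to-income ratio", -0.41),
        Factor("Employment tenure", 0.12),
        Factor("Loan-to-value ratio", -0.27),
        Factor("Savings history", 0.05),
    ],
    evidence={"declared_income": "see application form"},
)
```

Calling `summary_view(example)` returns only the score, the recommendation, and the three strongest factors, while `detail_view(example)` adds the remaining factors and the evidence. Keeping both views on one record means the quick read and the audit trail cannot drift apart.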

My Role

Anchored the process in a clear problem: risk and credit officers had to manually scan large volumes of data and interpret technical AI outputs to justify mortgage decisions, resulting in slower evaluations and inconsistent explanations.

Mapped lender-side decision workflows to understand how risk officers reviewed applications, where they paused to assess risk, and when explanations were required for compliance, audits, or escalations, revealing points where the interface overloaded users with raw data instead of supporting accountability.

Introduced progressive disclosure to align information density with the natural analysis flow, surfacing decision-critical signals such as soft scores and XAI-driven explanations first, while keeping detailed financial data and model inputs accessible for deeper, policy-aligned review.

Explored and prototyped XAI integration within the mortgage flow, working with stakeholders to translate explainable AI capabilities into clear, decision-grade explanations that enhanced transparency without exposing sensitive logic.

Designed at a system level, mapping interactions across users, workflows, and compliance logic to ensure solutions respected platform constraints, regulatory requirements, and Temenos Design System standards.

Collaborated across functions, partnering with product specialists, sales teams, UX designers, and engineers to translate complex financial workflows into intuitive, technically feasible experiences.

Trade-offs

Automation vs human oversight

AI insights were designed to support, not replace, risk officers’ judgment, preserving the ability to review, question, and override decisions in regulated contexts.

Clarity vs transparency

We avoided exposing all model inputs upfront, choosing to surface decision-critical insights first while keeping full data accessible on demand, reducing cognitive load without limiting auditability.

Explainability vs regulator safety

Instead of exposing detailed model logic, explanations were abstracted into policy-aligned language that could be safely reused in audits and compliance reviews.

Speed vs depth of analysis

Explanations were integrated at natural decision points to support fast evaluations, with deeper analysis available only when required for complex or edge cases.
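To make the "Explainability vs regulator safety" trade-off concrete, here is a small sketch of how internal model signals might be abstracted into policy-aligned wording. Everything in it is an assumption for illustration: the feature names, the phrases, and the fallback text are hypothetical and do not reflect the actual model or Temenos credit policy.

```python
# Hypothetical mapping from internal feature names to policy-aligned phrases.
POLICY_LANGUAGE = {
    "dti_ratio": "Debt-to-income ratio is outside the credit policy threshold",
    "ltv_ratio": "Loan-to-value ratio is above the policy limit",
}

# Generic wording used when a feature has no approved phrase (assumption).
FALLBACK = "Additional factor: refer to detailed review"


def explain(feature_names: list[str]) -> list[str]:
    """Translate feature names into phrases that can be reused in an audit."""
    return [POLICY_LANGUAGE.get(name, FALLBACK) for name in feature_names]
```

With this shape, `explain(["dti_ratio", "internal_feature_17"])` yields one policy phrase and one generic fallback, so no raw model identifier reaches the officer or the audit record.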

Impact

The new designs were showcased at Temenos’ annual customer event and received strong positive feedback, especially from clients in wealth management and corporate banking, where trust and compliance are critical. More importantly, the work positioned Temenos as one of the first SaaS banking providers to offer explainability as a built-in UX feature, which accelerated adoption of AI-driven features among risk-averse institutions.
